Predictive model using Support Vector Machine (SVM)

Notebook 6 Predictive model using Support Vector Machine (svm).

Support vector machines (SVMs) learning algorithm will be used to build the predictive model. SVMs are one of the most popular classification algorithms, and have an elegant way of transforming nonlinear data so that one can use a linear algorithm to fit a linear model to the data (Cortes and Vapnik 1995).

Kernelized support vector machines are powerful models and perform well on a variety of datasets.

SVMs allow for complex decision boundaries, even if the data has only a few features.

They work well on low-dimensional and high-dimensional data (i.e., few and many features), but don’t scale very well with the number of samples.

Running an SVM on data with up to 10,000 samples might work well, but working with datasets of size 100,000 or more can become challenging in terms of runtime and memory usage.

SVMs requires careful preprocessing of the data and tuning of the parameters. This is why, these days, most people instead use tree-based models such as random forests or gradient boosting (which require little or no preprocessing) in many applications.

SVM models are hard to inspect; it can be difficult to understand why a particular prediction was made, and it might be tricky to explain the model to a nonexpert.

Important Parameters

The important parameters in kernel SVMs are the

Regularization parameter C,

The choice of the kernel,(linear, radial basis function(RBF) or polynomial)

Kernel-specific parameters.

gamma and C both control the complexity of the model, with large values in either resulting in a more complex model. Therefore, good settings for the two parameters are usually strongly correlated, and C and gamma should be adjusted together.

Loading essential libraries

%matplotlib inline

import matplotlib.pyplot as plt

import pandas as pd

import numpy as np

from scipy.stats import norm

## Supervised learning.

from sklearn.preprocessing import StandardScaler

from sklearn.preprocessing import LabelEncoder

from sklearn.model_selection import train_test_split

from sklearn.svm import SVC

from sklearn.model_selection import cross_val_score

from sklearn.pipeline import make_pipeline

from sklearn.metrics import confusion_matrix

from sklearn import metrics, preprocessing

from sklearn.metrics import classification_report

# visualization

import seaborn as sns

plt.style.use('fivethirtyeight')

sns.set_style("white")

plt.rcParams['figure.figsize'] = (8, 4)

#plt.rcParams['axes.titlesize'] = 'large'

Importing data

'''

# raw data

data_df = pd.read_table('./dataset/MergeData.tsv', sep="\t", index_col=0)

data = data_df.reset_index(drop=True)

data.head()

# CLR-transformed data

data_df = pd.read_table('./dataset/MergeData_clr.tsv', sep="\t", index_col=0)

data = data_df.reset_index(drop=True)

data.head()

'''

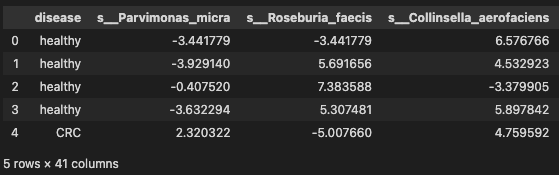

# significant species

data_df = pd.read_table('./dataset/MergeData_clr_signif.tsv', sep="\t", index_col=0)

data = data_df.reset_index(drop=True)

data.head()

Transformation

# Assign predictors to a variable of ndarray (matrix) type

array = data.values

X = array[:, 1:data.shape[1]] # features

y = array[:, 0]

# transform the class labels from their original string representation (CRC and healthy) into integers

le = LabelEncoder()

y = le.fit_transform(y)

# Normalize the data (center around 0 and scale to remove the variance).

scaler = StandardScaler()

Xs = scaler.fit_transform(X)

Classification with cross-validation

As discussed in notebook Pre-Processing the data splitting the data into test and training sets is crucial to avoid overfitting. This allows generalization of real, previously-unseen data. Cross-validation extends this idea further. Instead of having a single train/test split, we specify so-called folds so that the data is divided into similarly-sized folds.

Training occurs by taking all folds except one – referred to as the holdout sample.

On the completion of the training, you test the performance of your fitted model using the holdout sample.

The holdout sample is then thrown back with the rest of the other folds, and a different fold is pulled out as the new holdout sample.

Training is repeated again with the remaining folds and we measure performance using the holdout sample. This process is repeated until each fold has had a chance to be a test or holdout sample.

The expected performance of the classifier, called cross-validation error, is then simply an average of error rates computed on each holdout sample.

This process is demonstrated by first performing a standard train/test split, and then computing cross-validation error.

# Divide records in training and testing sets.

X_train, X_test, y_train, y_test = train_test_split(Xs, y, test_size=0.3, random_state=2, stratify=y)

# Create an SVM classifier and train it on 70% of the data set.

clf = SVC(probability=True)

clf.fit(X_train, y_train)

# Analyze accuracy of predictions on 30% of the holdout test sample.

classifier_score = clf.score(X_test, y_test)

print('\nThe classifier accuracy score is {:03.2f}\n'.format(classifier_score))

The classifier accuracy score is 0.66

- cross-validation

To get a better measure of prediction accuracy (which you can use as a proxy for “goodness of fit” of the model), you can successively split the data into folds that you will use for training and testing:

# Get average of 5-fold cross-validation score using an SVC estimator.

n_folds = 5

cv_error = np.average(cross_val_score(SVC(), Xs, y, cv=n_folds))

print('\nThe {}-fold cross-validation accuracy score for this classifier is {:.2f}\n'.format(n_folds, cv_error))

The 5-fold cross-validation accuracy score for this classifier is 0.70

The above evaluations were based on using the entire set of features. You will now employ the correlation-based feature selection strategy to assess the effect of using 40 features which have the best correlation with the class labels.

from sklearn.feature_selection import SelectKBest, f_regression

clf2 = make_pipeline(SelectKBest(f_regression, k=40), SVC(probability=True))

scores = cross_val_score(clf2, Xs, y, cv=5)

# Get average of 5-fold cross-validation score using an SVC estimator.

n_folds = 5

cv_error = np.average(cross_val_score(SVC(), Xs, y, cv=n_folds))

print('\nThe {}-fold cross-validation accuracy score for this classifier is {:.2f}\n'.format(n_folds, cv_error))

The 5-fold cross-validation accuracy score for this classifier is 0.70

print(scores)

avg = (100*np.mean(scores), 100*np.std(scores)/np.sqrt(scores.shape[0]))

print("Average score and uncertainty: (%.2f +- %.3f)%%"%avg)

[0.57425743 0.64356436 0.6039604 0.56435644 0.68 ]

Average score and uncertainty: (61.32 +- 1.936)%

From the above results, you can see that only a fraction of the features are required to build a model that performs similarly to models based on using the entire set of features. Feature selection is an important part of the model-building process that you must always pay particular attention to. The details are beyond the scope of this notebook. In the rest of the analysis, you will continue using the entire set of features.

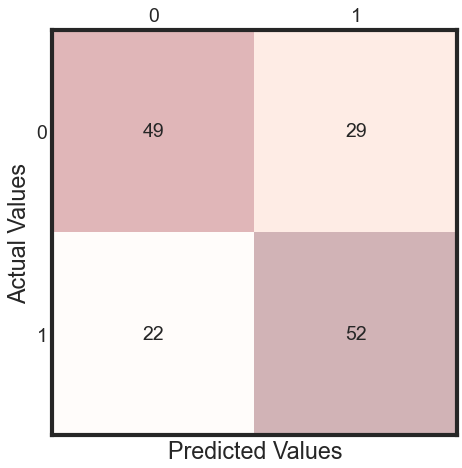

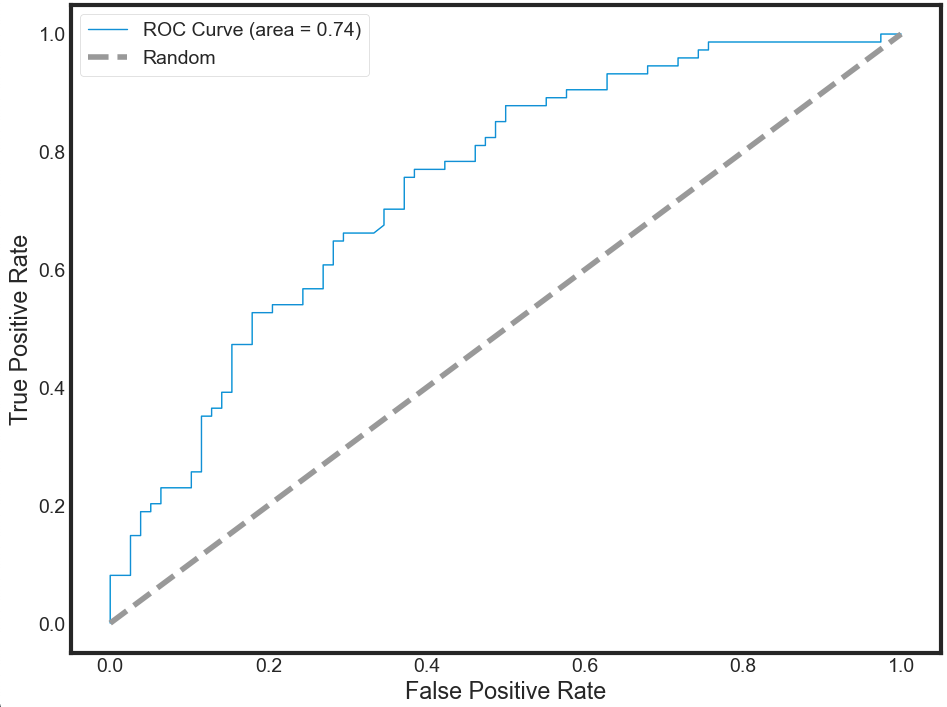

Model Accuracy: Receiver Operating Characteristic (ROC) curve

In statistical modeling and machine learning, a commonly-reported performance measure of model accuracy for binary classification problems is Area Under the Curve (AUC).

To understand what information the ROC curve conveys, consider the so-called confusion matrix that essentially is a two-dimensional table where the classifier model is on one axis (vertical), and ground truth is on the other (horizontal) axis, as shown below. Either of these axes can take two values (as depicted)

| Model says “+” | Model says “-” | |

|---|---|---|

True positive | False negative | ** Actual: “+” ** |

False positive | True negative | Actual: “-” |

In an ROC curve, you plot “True Positive Rate” on the Y-axis and “False Positive Rate” on the X-axis, where the values “true positive”, “false negative”, “false positive”, and “true negative” are events (or their probabilities) as described above. The rates are defined according to the following:

- True positive rate (or sensitivity)}: tpr = tp / (tp + fn)

- False positive rate: fpr = fp / (fp + tn)

- True negative rate (or specificity): tnr = tn / (fp + tn)

In all definitions, the denominator is a row margin in the above confusion matrix. Thus,one can express

- the true positive rate (tpr) as the probability that the model says “+” when the real value is indeed “+” (i.e., a conditional probability). However, this does not tell you how likely you are to be correct when calling “+” (i.e., the probability of a true positive, conditioned on the test result being “+”).

# The confusion matrix helps visualize the performance of the algorithm.

y_pred = clf.fit(X_train, y_train).predict(X_test)

cm = metrics.confusion_matrix(y_test, y_pred)

print(cm)

[[49 29]

[22 52]]

- plotting confusion matrix

%matplotlib inline

import matplotlib.pyplot as plt

from IPython.display import Image, display

fig, ax = plt.subplots(figsize=(5, 5))

ax.matshow(cm, cmap=plt.cm.Reds, alpha=0.3)

for i in range(cm.shape[0]):

for j in range(cm.shape[1]):

ax.text(x=j, y=i,

s=cm[i, j],

va='center', ha='center')

plt.xlabel('Predicted Values', )

plt.ylabel('Actual Values')

plt.show()

print(classification_report(y_test, y_pred))

precision recall f1-score support

0 0.69 0.63 0.66 78

1 0.64 0.70 0.67 74

accuracy 0.66 152

macro avg 0.67 0.67 0.66 152

weighted avg 0.67 0.66 0.66 152

Observation

There are two possible predicted classes: “1” and “0”. CRC = 1 and healthy = 0.

The classifier made a total of 148 predictions.

Out of those 148 cases, the classifier predicted “yes” 27 times, and “no” 37 times.

In reality, 30 patients in the sample have the disease, and 34 patients do not.

Rates as computed from the confusion matrix

Accuracy: Overall, how often is the classifier correct?

- (TP+TN)/total = (14+21)/64 = 0.55

Misclassification Rate: Overall, how often is it wrong?

- (FP+FN)/total = (13+16)/64 = 0.45 equivalent to 1 minus Accuracy also known as “Error Rate”

True Positive Rate: When it’s actually yes, how often does it predict 1?

- TP/actual yes = 14/30 = 0.47 also known as “Sensitivity” or “Recall”

False Positive Rate: When it’s actually 0, how often does it predict 1?

- FP/actual no = 16/37 = 0.43

Specificity: When it’s actually 0, how often does it predict 0? also know as true positive rate

- TN/actual no = 21/37 = 0.57 equivalent to 1 minus False Positive Rate

Precision: When it predicts 1, how often is it correct?

- TP/predicted yes = 14/27 = 0.52

Prevalence: How often does the yes condition actually occur in our sample?

- actual yes/total = 30/64 = 0.47

- plot ROC

from sklearn.metrics import roc_curve, auc

# Plot the receiver operating characteristic curve (ROC).

plt.figure(figsize=(10, 8))

probas_ = clf.predict_proba(X_test)

fpr, tpr, thresholds = roc_curve(y_test, probas_[:, 1])

roc_auc = auc(fpr, tpr)

plt.plot(fpr, tpr, lw=1, label='ROC Curve (area = %0.2f)' % (roc_auc))

plt.plot([0, 1], [0, 1], '--', color=(0.6, 0.6, 0.6), label='Random')

plt.xlim([-0.05, 1.05])

plt.ylim([-0.05, 1.05])

plt.xlabel('False Positive Rate')

plt.ylabel('True Positive Rate')

plt.legend(loc='best')

plt.show()

To interpret the ROC correctly, consider what the points that lie along the diagonal represent. For these situations, there is an equal chance of “+” and “-” happening. Therefore, this is not that different from making a prediction by tossing of an unbiased coin. Put simply, the classification model is random.

For the points above the diagonal, tpr > fpr, and the model says that you are in a zone where you are performing better than random. For example, assume tpr = 0.99 and fpr = 0.01, Then, the probability of being in the true positive group is $(0.99 / (0.99 + 0.01)) = 99%$. Furthermore, holding fpr constant, it is easy to see that the more vertically above the diagonal you are positioned, the better the classification model.

Reference

- Cortes, Corinna, and Vladimir Vapnik. 1995. “Support-Vector Networks.” Machine Learning 20: 273. Accessed September 3, 2016. doi: 10.1023/A:1022627411411.

- Breast-cancer-risk-prediction